It's my 4th year attending Orlando Code Camp at Seminole State College in Sanford, Florida. It's a great facility for hosting the event, and it is now hosted in the newer wing of the campus. As I always mention, the content here is every bit on par with that of the national conferences, often drawing some of the same presenters. If you are serious about furthering your knowledge, this once-a-year Saturday event is a must-attend.

FYI... some of the detail within might read a bit like jotted-down notes. I just wanted to get my recollection down per session, so some of it might be in its raw format.

Session 1: Unlocking the Power of Object Oriented C#, Jay Hill

To start off the day I went to an OO C# class, as I always enjoy this kind of stuff. I was a tad late getting from registration to the class, and it's tough to complain about anything volunteer-based and free, but the popularity of this event vs. the room capacity for certain sessions has been mismatched for the 2nd straight year. I had to sit cross-legged on the floor for the 1st session and I am feeling like a pretzel that needs to untwist... :(

Jay was breaking down and explaining the SOLID principles in OO development. Music to my ears: "Programming to abstractions is key to OO principles". He talked about the Liskov Substitution Principle and how its polymorphic behavior is based on interfaces. One way to violate this principle is to have any kind of branching logic that checks for concrete types. Implementations should be replaceable.
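
To make the substitution idea concrete, here is a minimal sketch of my own (not code from Jay's session). The IShape, Rectangle, Circle, and AreaCalculator names are hypothetical, chosen only to show a caller that never branches on a concrete type.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical abstraction; the names are illustrative only.
public interface IShape
{
    double Area();
}

public sealed class Rectangle : IShape
{
    private readonly double _width;
    private readonly double _height;

    public Rectangle(double width, double height)
    {
        _width = width;
        _height = height;
    }

    public double Area() => _width * _height;
}

public sealed class Circle : IShape
{
    private readonly double _radius;

    public Circle(double radius) { _radius = radius; }

    public double Area() => Math.PI * _radius * _radius;
}

public static class AreaCalculator
{
    // No "if (shape is Circle)" branching: any IShape can be substituted.
    public static double TotalArea(IEnumerable<IShape> shapes)
    {
        double total = 0;
        foreach (IShape shape in shapes)
        {
            total += shape.Area();
        }
        return total;
    }
}

public static class Program
{
    public static void Main()
    {
        var shapes = new List<IShape> { new Rectangle(2, 3), new Rectangle(1, 4) };
        Console.WriteLine(AreaCalculator.TotalArea(shapes)); // 10
    }
}
```

Adding a Circle to the list requires no change to AreaCalculator, which is the replaceability the principle asks for.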

Next he went into the Single Responsibility Principle and the levels of specificity and granularity involved. You want to make sure your classes and interfaces are not doing too much. Break them apart and use something like the Composite Pattern if needed. With this pattern you can combine 2 implementations of the same interface, say IOrder + IOrder, to make a CompositeOrder.
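
A rough sketch of that composite idea, written from my notes rather than copied from the session. IOrder and CompositeOrder are the names mentioned in the talk; the Total() member and SimpleOrder are assumptions I added for illustration.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public interface IOrder
{
    // Assumed member; the session did not show the actual interface.
    decimal Total();
}

public sealed class SimpleOrder : IOrder
{
    private readonly decimal _amount;

    public SimpleOrder(decimal amount) { _amount = amount; }

    public decimal Total() => _amount;
}

// The composite is itself an IOrder, so callers cannot tell one order from many.
public sealed class CompositeOrder : IOrder
{
    private readonly IReadOnlyList<IOrder> _orders;

    public CompositeOrder(params IOrder[] orders) { _orders = orders; }

    public decimal Total() => _orders.Sum(o => o.Total());
}

public static class Program
{
    public static void Main()
    {
        IOrder combined = new CompositeOrder(new SimpleOrder(10m), new SimpleOrder(5.50m));
        Console.WriteLine(combined.Total() == 15.50m); // True
    }
}
```

Because CompositeOrder implements IOrder, composites can also be nested inside other composites without any caller changing.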

One small presenter note, because I can be an armchair QB on the subject as I have attended lots of training over the years: presenters should balance leveraging snippets with writing raw code. You don't want to lose the audience while typing variable declarations and basic code. Just use a template or jump to a completed project to get the point across.

The biggest concept of this class was the power of abstractions vs. concretions and how hard that power is to match. Some in the class questioned whether this was 'overkill' for development. This is a normal reaction from those newer to OO concepts and scalable / testable architectures. I chimed in and stated that this was not overkill and that it creates a highly testable code base that is perfect for unit testing. It also makes 'unplugging and plugging in' components much easier than the opposite approach of tightly coupled code, which often has a shorter lifetime and is harder for follow-up developers to understand. Good session, and good start to the day.

Session 2: ASP.NET WebAPI and SignalR for Data Services, Brian Kassay

Begin by downloading the ASP.NET and Web Tools 2012.2 update, which updates ASP.NET Web API, OData, and SignalR, along with adding some MVC templates.

One of the coolest features I heard about is the ability to paste JSON and have a .NET class created from it. I'm actually already using Web API, but I wanted to see if I could fill in some gaps in my knowledge. For those of you that have not used it, Web API is an ASP.NET product based heavily on MVC (but more data-centric than presentation-centric) that offers HTTP RESTful services. Web API is essentially a bunch of NuGet packages, but the template will add them in and make the appropriate associations, so no worries. Just use VS.NET 2012 and you will be all set. The Web API template is under the Web -> MVC templates: after selecting MVC you will be able to select Web API.

Those of us familiar with controllers in MVC will feel right at home in Web API. The HTTP verbs are mapped by convention in the controller, so there is no need for explicit declarations. Making an HTTP POST to a Customer controller will automatically map to the Post() method without needing to decorate it as an HTTP POST method.

I'm actually knee deep in a Web API project now, so a lot of this session on the Web API part is review for me. No knock on the presenter here because he is doing a good job, but I will not regurgitate content that mostly could be found by going to ASP.NET and doing the '101' tutorials on Web API.

As is typical with short session classes, the presenters cannot expand on properly architecting code and are just displaying the use of the technology. If you choose to use Web API, I would recommend treating the Web API project/layer as only a presentation layer with skinny controllers. I would even advocate for not using any ViewModels or Models in this layer. Abstract that out to a Service layer instead of fattening up what is essentially a presentation/UI layer with all the components of an application. Just like in MVC, the Web API layer should be treated as a presentation layer only.
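
Here is a small sketch of what I mean by a skinny controller, kept free of any ASP.NET references so it stands on its own. All of the type names (CustomerDto, ICustomerService, InMemoryCustomerService, CustomerController) are hypothetical; in a real Web API project the controller would derive from ApiController.

```csharp
using System;
using System.Collections.Generic;

// Service layer: owns the models and the business rules.
public sealed class CustomerDto
{
    public int Id { get; set; }
    public string Name { get; set; }
}

public interface ICustomerService
{
    CustomerDto GetById(int id);
}

// Stand-in implementation; a real one would sit on top of a repository.
public sealed class InMemoryCustomerService : ICustomerService
{
    private readonly Dictionary<int, CustomerDto> _customers = new Dictionary<int, CustomerDto>
    {
        { 1, new CustomerDto { Id = 1, Name = "Ada" } }
    };

    public CustomerDto GetById(int id)
    {
        CustomerDto customer;
        return _customers.TryGetValue(id, out customer) ? customer : null;
    }
}

// Presentation layer: the controller only translates the request into a service call.
public sealed class CustomerController
{
    private readonly ICustomerService _service;

    public CustomerController(ICustomerService service) { _service = service; }

    public CustomerDto Get(int id) => _service.GetById(id);
}

public static class Program
{
    public static void Main()
    {
        var controller = new CustomerController(new InMemoryCustomerService());
        Console.WriteLine(controller.Get(1).Name);    // Ada
        Console.WriteLine(controller.Get(42) == null); // True
    }
}
```

The controller holds no logic worth unit testing on its own, which is the point: everything interesting lives behind ICustomerService.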

To make OData calls, make sure to uncomment the line that enables query support in the Web API config (config.EnableQuerySupport() in the template's WebApiConfig.cs), and add the [Queryable] attribute to a method to allow querying on it. Now when doing a GET you can add OData commands like "$top=2", "$filter=IsDone%20eq%20true", etc. to query the data. This is huge because in 2 lines of code, I have the ability to query with OData. You can also add a parameter of type ODataQueryOptions and parse the OData commands sent. One thing to note: as it was presented, the entire data set will be returned and only then filtered. This is why it is important to apply the filtering ahead of time on the repository call so that 1TB of data is not returned. I actually need some more clarification on this, because there seemed to be some confusion, and a question I asked about it wasn't answered the way I was expecting.
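
For reference, this is roughly how a client could assemble such a query string. It is only a sketch: the ODataUrl helper and the /api/todos endpoint are made up for illustration, and the only OData specifics it relies on are the standard $top and $filter query options.

```csharp
using System;
using System.Collections.Generic;

public static class ODataUrl
{
    // Builds a query string from OData system query options such as $top and $filter.
    public static string Build(string resourceUrl, IDictionary<string, string> options)
    {
        var parts = new List<string>();
        foreach (KeyValuePair<string, string> option in options)
        {
            // Option names keep their literal '$'; only the values are escaped.
            parts.Add(option.Key + "=" + Uri.EscapeDataString(option.Value));
        }
        return parts.Count == 0 ? resourceUrl : resourceUrl + "?" + string.Join("&", parts);
    }
}

public static class Program
{
    public static void Main()
    {
        // Sorted with an ordinal comparer so the output order is predictable.
        var options = new SortedDictionary<string, string>(StringComparer.Ordinal)
        {
            { "$filter", "IsDone eq true" },
            { "$top", "2" }
        };

        // "/api/todos" is a hypothetical endpoint.
        Console.WriteLine(ODataUrl.Build("/api/todos", options));
        // /api/todos?$filter=IsDone%20eq%20true&$top=2
    }
}
```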

Now the SignalR content is something I needed to absorb from a '101' perspective. There are templates in ASP.NET for SignalR classes once the update package is installed. WebSockets might be the future, but older browsers, incorrectly configured servers, etc. cause issues. SignalR has actually been absorbed by Microsoft and packaged up and made available in ASP.NET. Note this is a fork off the GitHub open source version. The advantage of Microsoft's version is that it's supported by Microsoft and stable. SignalR actually encapsulates several different ways to keep the connection up: WebSockets, server-sent events, forever frame, and as a last resort, long polling. Long polling might be the most brute-force but also the most stable.

Funny break... someone's GPS spoke aloud during class and stated "You have reached your destination". Little delay there, because we are about 2 hours into the day!

You need to get into 'Hub Code' vs. 'Persistent Connection Code' if doing anything mildly complex beyond returning simple strings.

Not a ton of time for deep detail, but if you need something on a web app presentation that needs to have close to real time updating, look to SignalR as the best tool to solve this today.

Session 3: Chalk Talk - Code Style and Standards, Scott Dorman

Next up 'Code Style and Standards' by Scott Dorman, MVP and author of 3 books. I always enjoy gravitating to individuals this seasoned to draw on their experience.

I was one of 2 'return attendees' to this talk, as I attended it last year and have since helped implement code standards at 2 different companies.

Readability and understandability are keys to writing code. Who is interpreting the code, a compiler or a human? Which one is key in making the other work well? Humans read code and it behooves us to write to a standard.

This is a tough session to apply a synopsis to because my mind wanders to all of my thoughts on this (which are right in line with Scott's views). I can and need to branch off and just write an entire post on this.

A couple of key observations: code standards must be continually updated. If you have C# code standards, do they include standards and guidelines for things like LINQ or async/await? It is important for the code standards to be a dynamic, living document.

There were conversations on how much commenting to use, how long vs. short the guidelines should be, and tools to help support enforcing coding guidelines (ReSharper, StyleCop, FxCop, Code Analysis, MZ-Tools, etc.). Just remember there is no replacement for true code reviews. The tools are like the icing on the cake, but they don't enforce how the code was constructed.

If you are thinking about implementing code standards, step 1 is you MUST get management buy-in. Without this it will not stand up.

This class offered way too much great dialog back and forth (much of which I was involved in, drawing on my experiences) to remark on here. I'll have to write a post exclusively on this topic in the future.

Session 4: Unit Testing with Fakes and Mocks in VS 2012, Brian Minisi

OK, looking forward to the next session, which is Fakes and Mocks in VS.NET 2012. I'm a huge advocate of unit testing and its benefits.

He began by discussing the application life cycle and where impediments to quality can occur in that life cycle. Why unit test? Find bugs early, ensure quality of code.

Black box testing is what QA testers do, white box testing is unit testing, and gray box testing is a combination of both.

Stubs replace a class with a substitute. Shims make it possible to unit test code that cannot otherwise be tested in isolation.

Test doubles isolate the code under test from its dependencies. Stubs provide state verification rather than behavior verification. Mocks provide behavior verification.

Mocks are similar to stubs. They do state verification but also do behavioral verification. Behavioral verification is "did the method underneath get called, did the properties get set as expected, did the expected number of records get returned." Behavioral verification is something newer to me in unit testing. You're verifying, for example, that a method does what it is supposed to do internally.

Microsoft Fakes will not do behavioral verification at this point. The main thing to understand is that Mocks, Stubs, and Shims are all considered 'Fakes'. Mocks have state AND behavioral verification, and Stubs only have state verification.
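
To pin down the state vs. behavioral distinction for myself, here is a hand-rolled sketch that uses neither MS Fakes nor Moq. Every type in it (ICustomerRepository, StubCustomerRepository, MockCustomerRepository, Greeter) is hypothetical.

```csharp
using System;

public interface ICustomerRepository
{
    string FindName(int id);
}

// Stub: returns canned data so the test can check the resulting state.
public sealed class StubCustomerRepository : ICustomerRepository
{
    public string FindName(int id) => "Ada";
}

// Hand-rolled mock: also records how it was used so the test can check behavior.
public sealed class MockCustomerRepository : ICustomerRepository
{
    public int FindNameCalls { get; private set; }

    public string FindName(int id)
    {
        FindNameCalls++;
        return "Ada";
    }
}

// Code under test; it depends only on the abstraction.
public sealed class Greeter
{
    private readonly ICustomerRepository _repository;

    public Greeter(ICustomerRepository repository) { _repository = repository; }

    public string Greet(int id) => "Hello, " + _repository.FindName(id) + "!";
}

public static class Program
{
    public static void Main()
    {
        // State verification: only the returned value is inspected.
        var withStub = new Greeter(new StubCustomerRepository());
        Console.WriteLine(withStub.Greet(1) == "Hello, Ada!"); // True

        // Behavioral verification: was the dependency called, and how often?
        var mock = new MockCustomerRepository();
        new Greeter(mock).Greet(1);
        Console.WriteLine(mock.FindNameCalls == 1); // True
    }
}
```

A mocking library generates the recording part for you; the hand-written counter here just shows what is being recorded.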

Don't call out to the database to do unit tests. The data could change and it does not allow for true unit testing. Use a stub to mimic the data returned from the DB.

MS Fakes is cool because whether shims and stubs are generated is controlled through configuration. Fakes are entire assemblies that have been faked. The stubs and shims are available on the fakes assembly within the unit test project.

It was interesting that he made comparisons by writing the same test with MS Fakes and with Moq.

You tell the mock what you are going to stub with (mockInstance).Setup(x => x.Find(It.IsAny

Times.Between(2, 4, Range.Inclusive) tests with Moq that the method is called 2, 3, or 4 times. This is an example of behavioral verification.

Shims replace functionality. For example, DateTime.Now can be shimmed to always return January 15, 2013. Shims have to be used within a using (ShimsContext.Create()) block inside the test method. Only use shims if you CAN'T use stubs. Shims are a LOT slower than stubs, and 500 unit tests should run in less than 1 second. Use shims for static methods.
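
One common way to avoid needing a shim for DateTime.Now at all is to hand the clock to the class as a dependency, which is then trivially stubbed. This is my own sketch of that alternative, not something shown in the session, and InvoiceStamper is a made-up class.

```csharp
using System;
using System.Globalization;

public sealed class InvoiceStamper
{
    private readonly Func<DateTime> _now;

    // Production code passes () => DateTime.Now; tests pass a fixed value.
    public InvoiceStamper(Func<DateTime> now) { _now = now; }

    public string Stamp(string invoiceNumber)
    {
        // Invariant culture keeps the formatted date stable across machines.
        return invoiceNumber + " issued " + _now().ToString("yyyy-MM-dd", CultureInfo.InvariantCulture);
    }
}

public static class Program
{
    public static void Main()
    {
        // The "stubbed clock" always returns January 15, 2013.
        var stamper = new InvoiceStamper(() => new DateTime(2013, 1, 15));
        Console.WriteLine(stamper.Stamp("INV-1")); // INV-1 issued 2013-01-15
    }
}
```

Shims remain the fallback when the code calling DateTime.Now cannot be changed to accept a dependency like this.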

I know there were a lot of fragmented sentences in this one but there was a ton of good information and a lot of clarity from the standpoint of proper terminology and usage when writing unit tests. If anything, take some of the information and expand your own knowledge on the topic. I will definitely be adding posts on unit testing and Moq in the near future.

Session 5: Intro to Open Data Protocol (OData), John Wang

OData is a uniform way of exposing and structuring data, with payloads in formats like Atom or JSON. OData is not a Microsoft-only format or technology. However, in .NET you can build OData services with WCF and Web API endpoints. OData uses the standard PUT, GET, POST, and DELETE HTTP operations, typically using GET for data querying and retrieval.

The OData query options are extensive and T-SQL-esque in nature when thinking about how to query data. OData V3 is the current version, so the standard has had time to mature. Almost every platform can consume the OData protocol. All of this is a part of WCF Data Services 5.0. WCF Data Services templates are under 'Data' in VS.NET. It appears v5.3 is the most current version. Note: You can download the latest version of WCF Data Services from Microsoft, and it is required if you are planning to build this out using VS.NET.

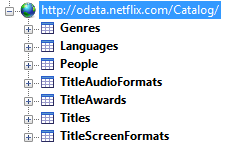

LINQPad has some FANTASTIC ability for querying the catalog through a tree structure (see my post based on this: Use LINQPad to Query an OData Service). The tree structure is available once a valid connection is created in LINQPad. It's really cool that you can write a LINQ query and it will create the OData GET query for you. This is a great way to learn and reverse engineer the OData commands. You can also press the 'Lambda' button and see the equivalent lambda expression. Actually, this is a good tool generically for converting between LINQ queries and lambda expressions outside of anything OData related. The presenter was hitting the Netflix OData API (http://odata.netflix.com/v2/catalog/) to test, as it returns OData format.
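
As a reminder of what the query-to-lambda conversion looks like, here is a tiny in-memory sketch (no OData or LINQPad involved). The TitleQueries class and the sample titles are made up.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class TitleQueries
{
    // Query syntax.
    public static IEnumerable<string> WithQuerySyntax(IEnumerable<string> titles) =>
        from t in titles
        where t.StartsWith("A", StringComparison.Ordinal)
        orderby t
        select t;

    // Equivalent method (lambda) syntax; the compiler turns the query above into calls like these.
    public static IEnumerable<string> WithLambdaSyntax(IEnumerable<string> titles) =>
        titles.Where(t => t.StartsWith("A", StringComparison.Ordinal)).OrderBy(t => t);
}

public static class Program
{
    public static void Main()
    {
        var titles = new List<string> { "Alien", "Brazil", "Avatar", "Casablanca" };

        Console.WriteLine(string.Join(", ", TitleQueries.WithQuerySyntax(titles))); // Alien, Avatar
        Console.WriteLine(TitleQueries.WithQuerySyntax(titles)
            .SequenceEqual(TitleQueries.WithLambdaSyntax(titles)));                 // True
    }
}
```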

Even Code Camp had OData exposed via odata.orlandocodecamp.com/dataservice.svc/ to allow querying of the presenters and their classes.

The querying capability on the query string is unbelievable. This to me is a front runner for exposing relational data in a RESTful manner, returning a common OData format.

Libraries to consume and process OData exist in JavaScript, Java, AJAX, PHP, Objective C, Windows Phone, WCF Data Services, Silverlight, and many more.

When creating a WCF data service you will need to create a data model server side using EF, or using ADO.NET exposed through IQueryable.

Web API has a LIMITED set of OData support and WCF Data Services has full OData support, so go with WCF if you need full OData support.

The presenter even did a PowerShell example. The point? There is a ton of support for querying the OData format.

Netflix's entire catalog is completely exposed in OData format. The flexibility and power of OData matter because it is not technology or platform specific. Web, Mobile, Windows 8, etc. can all use the same format. He had a single app getting the OData, with HTML5, JS/Win8, and WP7 all being able to display it with no changes.

You can easily configure which entities are readable through code on the WCF data service side (i.e. 'AllRead'). Create a SQL View in the EF model if you need a 'canned' result to be returned, so that the client does not always need to supply some sort of complex OData commands to query a source.

Since WCF Data Services runs atop WCF, there should be the potential to wrap in Basic Authentication to siphon the header values (user/pass) early in the WCF call stack, and apply custom authorization rules about who can see what entity. I need to verify this, but it should work well. See my post on Basic Authentication in WCF (it's for REST based WCF, but the implementation should hold the same) for further details: 'RESTful Services: Authenticating Clients Using Basic Authentication'.

One cool note about this presentation... I realized the presenter was using a Surface RT to do the presentation, with VS.NET and SQL Server. It must have some decent processing capability to run that software so well. Very cool.

Session 6: Database Design Disasters, Richie Rump (@jorriss)

Next up is Database Design Disasters. The presenter is a tech geek turned PM turned back to tech guy (as it pays more ;))

Think of your database as your application's foundation, analogous to the foundation of a building. The application might have a shelf life of ~5 years, but the data will live on. The more time we spend on our foundation, the better the application can be.

SQL Server's 1st version, built with Sybase, was released in 1989. That is over 20 years of development in a robust database. He compared it to Alice's (in Wonderland) rabbit hole, which goes deeper, deeper, and deeper, and you still can't know it all. Developers, don't think you can know it all, because even DBAs can't. Leverage your DBA's knowledge and improve that typically adversarial relationship.

NoSQL databases are a hot topic because they are not relational databases. However, don't worry, the relational database is not going away.

Topic 1: Poor Data Types. Having the incorrect data types can cause issues in the long run including casts and inefficiencies.

char vs. varchar: A char will be padded with spaces, so a char(10) will pad at the end with spaces up to 10 characters. varchar is the ACTUAL length of the data + 2 bytes. Storage in SQL Server is not just disk but memory too (because data pages are loaded into memory to be read).

nchar vs. nvarchar: their strong point is holding Unicode data. If you are storing a phone number, don't use NVARCHAR. He deals with a 16 terabyte database where everything is NVARCHAR, which makes a DBA mad. You cannot use a varchar(max) or nvarchar(max) column as an index key, so a search on that data requires a full table scan, which is extremely inefficient.

DON'T EVER USE BIGINT (8 bytes vs. 4 bytes) unless your table has more than 2 billion records. Remember that the size is paid in storage, in indexes, and in memory.
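
To put rough numbers on the data type advice, here is a back-of-the-envelope sketch. The ColumnSize helper is hypothetical, and it assumes single-byte characters for varchar, 2 bytes per character for nvarchar, and the 2-byte variable-length overhead mentioned above. It ignores row and page overhead entirely.

```csharp
using System;

public static class ColumnSize
{
    // char(n): always n bytes, padded with trailing spaces.
    public static int CharBytes(int declaredLength) => declaredLength;

    // varchar(n): actual data length plus 2 bytes of length overhead.
    public static int VarcharBytes(string value) => value.Length + 2;

    // nvarchar(n): 2 bytes per character plus the same 2 bytes of overhead.
    public static int NVarcharBytes(string value) => value.Length * 2 + 2;

    // Extra bytes spent on a bigint key instead of an int key (8 vs. 4 bytes per row).
    public static long BigintOverheadBytes(long rows) => rows * (8 - 4);
}

public static class Program
{
    public static void Main()
    {
        Console.WriteLine(ColumnSize.CharBytes(10));                  // 10
        Console.WriteLine(ColumnSize.VarcharBytes("Ann"));            // 5
        Console.WriteLine(ColumnSize.NVarcharBytes("Ann"));           // 8
        Console.WriteLine(ColumnSize.BigintOverheadBytes(100000000)); // 400000000
        Console.WriteLine(int.MaxValue);                              // 2147483647
    }
}
```

The last line is where the "2 billion records" threshold comes from: an int key tops out at 2,147,483,647. The bigint overhead shown is for the key column alone, before any index that repeats the key.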

No indexes on Foreign Keys (unless used properly): this might slow queries with JOINs and will have a performance impact on deletes. He did an example, 1st with no foreign keys and just JOINs: 1226 logical reads (the number of 8K page reads). Then he added a foreign key and an index. The execution plan thus far was the same, but it actually performed better at ~750 reads. However, it added a Key Lookup back into the clustered index. Then he got it down to 4 page reads by creating a nonclustered index with an INCLUDE (column_name) clause. He coasted right through this, so I need to research it more.

Don't always trust what SQL Server is suggesting, because often the suggestions are incorrect.

Data is stored in the clustered index; 'tables' are a metadata representation.

He was running 'DBCC DROPCLEANBUFFERS' between schema updates.

Then he talked about the Entity-Attribute-Value pattern. It is essentially a 'Value' column holding the value that goes with an 'AttributeType' column. For example, Birthdate and 1/1/1980, or Phone and 800-123-4567. The problem is there is no type checking, and it is complicated to query. I didn't like this at all and have not employed any type of dynamic columns before. He was not advocating for this, just showing the challenges with it.

Pivot querying is syntactic sugar and is slow on billion-row queries.

GUIDs (as a clustered index) are 16 bytes, which is 2x as big as BIGINT, which is already a no-no. They also suffer from fragmentation on disk. They are not good candidates for primary keys. In his example, space used on disk was 12 MB vs. 33 MB, with int at 0.5% fragmentation and GUIDs at 99% fragmentation. When you create a primary key, SQL Server automatically makes a clustered index by default. So yes, using GUIDs as a primary key is not good. The answer was to use a sequential GUID. Fragmentation goes down to 0.6%. The size problem still exists, but fragmentation is solved. Google 'primary key fragmentation GUID' for more info.
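
The fragmentation difference comes down to where new keys land in an ordered index. The sketch below is a deliberately simplified model of my own, not a SQL Server measurement: it just counts how many keys arrive below the current maximum and would therefore have to be squeezed in between existing rows. KeyOrderSimulation is a hypothetical helper, and .NET's Guid ordering is not the same as SQL Server's uniqueidentifier ordering, though random keys scatter either way.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class KeyOrderSimulation
{
    // Counts keys that arrive out of order, i.e. would be inserted mid-index rather than appended.
    public static int CountMidIndexInserts<T>(IEnumerable<T> keysInArrivalOrder) where T : IComparable<T>
    {
        int midInserts = 0;
        bool hasMax = false;
        T max = default(T);

        foreach (T key in keysInArrivalOrder)
        {
            if (hasMax && key.CompareTo(max) < 0)
            {
                midInserts++; // lands between existing rows
            }
            else
            {
                max = key;    // appended at the end of the index
                hasMax = true;
            }
        }
        return midInserts;
    }
}

public static class Program
{
    public static void Main()
    {
        // Identity-style keys always append.
        IEnumerable<int> identityKeys = Enumerable.Range(1, 1000);
        // Random GUIDs land all over the key range.
        IEnumerable<Guid> randomGuids = Enumerable.Range(0, 1000).Select(_ => Guid.NewGuid()).ToList();

        Console.WriteLine(KeyOrderSimulation.CountMidIndexInserts(identityKeys)); // 0
        Console.WriteLine(KeyOrderSimulation.CountMidIndexInserts(randomGuids));  // varies per run, close to 1000
    }
}
```

A sequential GUID behaves like the identity case in this model, which matches the drop in fragmentation he showed, while the 16-byte size stays the same.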

Surrogate keys with no alternate key: surrogate keys are a good thing and he uses them in everything. (NOT NULL IDENTITY(1,1)), simple. The problem is the same record can be inserted 2x. By adding an alternate key (e.g., on user_id and password) you will not be able to insert the same data 2x.

Document your decisions no matter which decision you make. Wiki, document, ER Studio, ERwin, whatever. Just document your decisions.

Read 'Data Model Resource Book' vol 1-3. Very good resources (manufacturing, healthcare, etc.)

Session 7: Slicing Up The Onion Architecture, Jack Pines (@bidevadventures)

Slicing up the Onion Layer Architecture to wrap up the day. This has been like all of the other years in that I can't believe it is already 4:00 and the day's almost done.

SOLID -> Software development is not a Jenga game. That is what you get when piling crap on and then trying to pull something out from the bottom.

He started out by talking about a common 3 layer architecture, and he showed an awesome slide of how much spaghetti code can spawn from this. Onion architecture, much like DDD, focuses on the domain layer, which rarely changes, in combination with DI, which provides the flexibility to switch things out. In a 3 layer architecture, however, the UI has to know about the business layer, which has to know about the data layer, which in essence means the UI still knows about the data layer. It's tightly coupled, and actually it's transitively tightly coupled.

He had some fantastic slides with visuals on his architecture. Here is a link to the slides from his presentation. One showed the domain as the core, with everything else as a round (onion) layer around the core. I saw the separation of domain services and application services, which is something I'm personally interested in. Inner layers define interfaces, outer layers implement them. The most important code, your business models and logic, is your core. Only take dependencies on the core.
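
A compressed sketch of "inner layers define interfaces, outer layers implement them", using namespaces to stand in for separate projects. This is my own illustration, not Jack's template, and every type name in it is hypothetical.

```csharp
using System;
using System.Collections.Generic;

namespace Core
{
    // Inner layer: business model plus the interface it needs from the outside world.
    public sealed class Order
    {
        public int Id { get; set; }
        public decimal Amount { get; set; }
    }

    public interface IOrderRepository
    {
        void Save(Order order);
        int Count { get; }
    }

    public sealed class OrderService
    {
        private readonly IOrderRepository _repository;

        public OrderService(IOrderRepository repository) { _repository = repository; }

        public bool Place(Order order)
        {
            if (order.Amount <= 0) return false; // business rule lives in the core
            _repository.Save(order);
            return true;
        }
    }
}

namespace Infrastructure
{
    // Outer layer: implements the core's interface and references Core, never the reverse.
    public sealed class InMemoryOrderRepository : Core.IOrderRepository
    {
        private readonly List<Core.Order> _orders = new List<Core.Order>();

        public void Save(Core.Order order) { _orders.Add(order); }

        public int Count => _orders.Count;
    }
}

namespace App
{
    public static class Program
    {
        public static void Main()
        {
            // Composition root: the only place that knows the concrete type.
            var repository = new Infrastructure.InMemoryOrderRepository();
            var service = new Core.OrderService(repository);

            Console.WriteLine(service.Place(new Core.Order { Id = 1, Amount = 25m })); // True
            Console.WriteLine(service.Place(new Core.Order { Id = 2, Amount = 0m }));  // False
            Console.WriteLine(repository.Count);                                       // 1
        }
    }
}
```

In a real solution an IoC container would do the wiring that Main does by hand here.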

His goal was to prove this whole IoC/DI layering thing is not over-architecting but a simple, straightforward, and easy to understand architecture.

Take a look at the 'Templify' product for creating different templates of applications. You can get his templates, which I personally would be interested in looking at.

This architecture proves how you can switch out UIs easily. He chose MVC because of its support for IoC containers, but you could use anything. The core domain is the focus.

He took a step by step approach for converting his empty template into the onion architecture. He was highlighting how easy it is to move away from concretions to abstractions. And indeed it is very easy.

The main difference between some of the authors of the onion architecture and his implementation is that they were really opinionated about specific technologies (NHibernate, Castle Windsor, etc.), whereas he wanted to simplify things and get folks to focus on the architecture. I like this attitude; no architecture should be focused on specific technologies.

IoC containers are a great way to get away from concretions, which by nature make code tightly coupled.

He was a fan of BDD over TDD. He did not go too deep into this, but I write this comment just to give a feel for what others are doing in the industry.

He talked about using the strategy pattern (no if or case statements), which allows for true polymorphic behavior decided by the user at runtime (which service: Bing or Google).
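
Here is a minimal sketch of that runtime choice without an if or switch. It is my own reconstruction of the idea, not his code: ISearchService, the two implementations, and the SearchStrategies lookup are hypothetical, and the URLs are only there to make the output visibly different.

```csharp
using System;
using System.Collections.Generic;

public interface ISearchService
{
    string BuildUrl(string query);
}

public sealed class BingSearch : ISearchService
{
    public string BuildUrl(string query) =>
        "https://www.bing.com/search?q=" + Uri.EscapeDataString(query);
}

public sealed class GoogleSearch : ISearchService
{
    public string BuildUrl(string query) =>
        "https://www.google.com/search?q=" + Uri.EscapeDataString(query);
}

public static class SearchStrategies
{
    private static readonly Dictionary<string, ISearchService> Services =
        new Dictionary<string, ISearchService>(StringComparer.OrdinalIgnoreCase)
        {
            { "bing", new BingSearch() },
            { "google", new GoogleSearch() }
        };

    // The lookup replaces the if/switch; adding a provider means adding a dictionary entry.
    public static ISearchService For(string userChoice) => Services[userChoice];
}

public static class Program
{
    public static void Main()
    {
        // The user's choice arrives at runtime as plain text.
        ISearchService service = SearchStrategies.For("Google");
        Console.WriteLine(service.BuildUrl("onion architecture"));
        // https://www.google.com/search?q=onion%20architecture
    }
}
```

With an IoC container, the dictionary would typically be replaced by named registrations, but the calling code would look the same.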

I highly recommend looking at the referenced materials in the links on his site: http://www.jackpines.info/

Orlando Code Camp 2013 Wrap Up!

The amazing thing to me is how quickly a Saturday goes by each time I go to Code Camp. I always feel satisfaction after the day is done because of the ample amount learned, the interactions with peers, and good conversations that are spawned. I want to thank all of the Code Camp volunteers as they do an excellent job year in and year out. I am already looking forward to 2014!