If you are fortunate enough to use the highest VS.NET edition, which includes additional testing features, or have purchased a license for a product such as dotCover, then you already have access to unit test code coverage tools. However, there is still an easy and powerful way to get the same type of metrics using a combination of mstest.exe and two open source tools: OpenCover and ReportGenerator.

OpenCover will leverage mstest.exe to run your unit tests and determine how much of your application's code they cover. ReportGenerator then takes those results and renders them as an HTML report. The really cool part is that since it is all scriptable, you could make the output report an artifact of a Continuous Integration (CI) build definition. In this manner you can see how a team or project is doing with respect to the code base and unit testing after each check-in and build.

A quick word on what this post will not get into: what percentage indicates that the code has decent coverage? 100%? 75%? Does it matter? The answer is that it depends, and there is no single benchmark to use. One should strive to create unit tests that are meaningful. Unit testing getters and setters to achieve 100% code coverage might not be a good use of time. Testing that a critical workflow's state and behavior are as intended is an example of a unit test worth writing. The output of these tools will just help highlight any holes in unit testing that might exist. I could go into detail on percentages of code coverage and what types of tests to write in another post. The bottom line: just make sure you are at least doing some unit testing. 0% is not acceptable by any means.

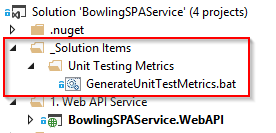

Before starting, note that you can also get this script from my 'BowlingSPAService' solution on GitHub, within the BowlingSPA repository. You can clone the repository to your machine and inspect or run the script. Run the script as an Administrator and view the output.

BowlingSPA GitHub

1. Download NuGet Packages:

Download and import the following two open source packages from NuGet into your test project. If you have multiple test projects, no worries. The packages will be referenced in the script via their 'packages' folder location and not via any specific project. The test projects' output is the target of these packages.

OpenCover - Used for calculating the metrics

Report Generator - Used for displaying the metrics

The documentation you'll need to refer to most is for OpenCover. Its wiki is on GitHub and can be found at the location below. ReportGenerator doesn't need much tweaking, as it really just renders the metrics generated by OpenCover as an HTML report. The OpenCover wiki was my guide for creating the batch file commands used in this article.

OpenCover Wiki

2. Create a .bat file script in your solution

I prefer to place these types of artifacts in a 'Solution Folder' (virtual folder) at the root to be easily accessible.

3. Use the following commands to generate the metrics and report

a. Run OpenCover using mstest.exe as the target:

Note: Make sure the file versions in this script code are updated to match whatever NuGet package version you have downloaded.

    "%~dp0..\packages\OpenCover.4.5.3723\OpenCover.Console.exe" ^
     -register:user ^
     -target:"%VS120COMNTOOLS%\..\IDE\mstest.exe" ^
     -targetargs:"/testcontainer:\"%~dp0..\BowlingSPAService.Tests\bin\Debug\BowlingSPAService.Tests.dll\" /resultsfile:\"%~dp0BowlingSPAService.trx\"" ^
     -filter:"+[BowlingSPAService*]* -[BowlingSPAService.Tests]* -[*]BowlingSPAService.RouteConfig" ^
     -mergebyhash ^
     -skipautoprops ^
     -output:"%~dp0\GeneratedReports\BowlingSPAServiceReport.xml"

The main pieces to point out here are the following:

- Leverages mstest.exe to target 'BowlingSPAService.Tests.dll' and send the test results to an output .trx file. Note: you can chain together as many test .dlls as you have in your solution; you may well have more than one test project.

- I've added filters that include anything in assemblies matching 'BowlingSPAService*' but exclude the 'BowlingSPAService.Tests' assembly, as I don't want metrics on the test code itself or for it to show up in the output report. Note: these filters can have as many or as few conditions as you need for your application. After getting familiar with the report, you will probably want to exclude auto-generated classes (e.g. Entity Framework, WCF, etc.) from the results via their namespace.

- Use 'mergebyhash' to merge results loaded from multiple assemblies

- Use 'skipautoprops' to skip .NET 'AutoProperties' from being analyzed (basic getters and setters don't require unit tests and thus shouldn't be reported on the output)

- Output the information for the report (used by ReportGenerator) to 'BowlingSPAServiceReport.xml'
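As a rough sketch of the chaining mentioned above, the command could look like the following if the solution had a second test project. The 'BowlingSPAService.IntegrationTests' project is hypothetical and used only for illustration; the sketch relies on mstest.exe accepting the /testcontainer switch more than once, and it adds a matching exclusion filter so the second test assembly stays out of the report.

```bat
REM Sketch: cover two test assemblies in one OpenCover run.
REM 'BowlingSPAService.IntegrationTests' is a hypothetical second test project.
"%~dp0..\packages\OpenCover.4.5.3723\OpenCover.Console.exe" ^
 -register:user ^
 -target:"%VS120COMNTOOLS%\..\IDE\mstest.exe" ^
 -targetargs:"/testcontainer:\"%~dp0..\BowlingSPAService.Tests\bin\Debug\BowlingSPAService.Tests.dll\" /testcontainer:\"%~dp0..\BowlingSPAService.IntegrationTests\bin\Debug\BowlingSPAService.IntegrationTests.dll\" /resultsfile:\"%~dp0BowlingSPAService.trx\"" ^
 -filter:"+[BowlingSPAService*]* -[BowlingSPAService.Tests]* -[BowlingSPAService.IntegrationTests]* -[*]BowlingSPAService.RouteConfig" ^
 -mergebyhash ^
 -skipautoprops ^
 -output:"%~dp0\GeneratedReports\BowlingSPAServiceReport.xml"
```

Both assemblies write to the same .trx and the same OpenCover .xml file, so the ReportGenerator step does not need to change.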

b. Run Report Generator to create a human readable HTML report

    "%~dp0..\packages\ReportGenerator.2.1.5.0\ReportGenerator.exe" ^
     -reports:"%~dp0\GeneratedReports\BowlingSPAServiceReport.xml" ^
     -targetdir:"%~dp0\GeneratedReports\ReportGenerator Output"

The main pieces to point out here are the following:

- Calls ReportGenerator.exe from the packages directory (NuGet), providing the output .xml report file generated in #3(a) above, and specifying the target directory in which to generate the index.htm page.

- The report creation directory can be anywhere you wish, but I created a folder named 'ReportGenerator Output'

c. Automatically open the report in the browser

start "report" "%~dp0\GeneratedReports\ReportGenerator Output\index.htm"

The main pieces to point out here are the following:

- This will open the generated report in the machine's default browser

- Note: if IE is used, you will be prompted to allow 'Blocked Content.' I usually allow as it provides links on the page with options to collapse and expand report sections.
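If the same script also runs on a build server, launching a browser is usually not wanted there. One possible way to handle that, shown here only as a sketch, is to guard the launch with an environment variable. The name SKIP_REPORT_LAUNCH is hypothetical; it is not set by any tool mentioned in this post, so the build definition would have to define it.

```bat
REM Sketch: only open the browser for interactive runs.
REM SKIP_REPORT_LAUNCH is a hypothetical variable that a build definition could set.
if not defined SKIP_REPORT_LAUNCH (
  start "report" "%~dp0\GeneratedReports\ReportGenerator Output\index.htm"
)
```

On a developer machine the variable is normally undefined, so the report opens exactly as before.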

4. Stitch together all the sections into a single script to run

    REM Create a 'GeneratedReports' folder if it does not exist
    if not exist "%~dp0GeneratedReports" mkdir "%~dp0GeneratedReports"

    REM Remove any previous test execution files to prevent issues overwriting
    IF EXIST "%~dp0BowlingSPAService.trx" del "%~dp0BowlingSPAService.trx"

    REM Remove any previously created test output directories
    CD /D "%~dp0"
    FOR /D /R %%X IN (%USERNAME%*) DO RD /S /Q "%%X"

    REM Run the tests against the targeted output
    call :RunOpenCoverUnitTestMetrics

    REM Generate the report output based on the test results
    if %errorlevel% equ 0 (
     call :RunReportGeneratorOutput
    )

    REM Launch the report
    if %errorlevel% equ 0 (
     call :RunLaunchReport
    )
    exit /b %errorlevel%

    :RunOpenCoverUnitTestMetrics
    "%~dp0..\packages\OpenCover.4.5.3723\OpenCover.Console.exe" ^
     -register:user ^
     -target:"%VS120COMNTOOLS%\..\IDE\mstest.exe" ^
     -targetargs:"/testcontainer:\"%~dp0..\BowlingSPAService.Tests\bin\Debug\BowlingSPAService.Tests.dll\" /resultsfile:\"%~dp0BowlingSPAService.trx\"" ^
     -filter:"+[BowlingSPAService*]* -[BowlingSPAService.Tests]* -[*]BowlingSPAService.RouteConfig" ^
     -mergebyhash ^
     -skipautoprops ^
     -output:"%~dp0\GeneratedReports\BowlingSPAServiceReport.xml"
    exit /b %errorlevel%

    :RunReportGeneratorOutput
    "%~dp0..\packages\ReportGenerator.2.1.5.0\ReportGenerator.exe" ^
     -reports:"%~dp0\GeneratedReports\BowlingSPAServiceReport.xml" ^
     -targetdir:"%~dp0\GeneratedReports\ReportGenerator Output"
    exit /b %errorlevel%

    :RunLaunchReport
    start "report" "%~dp0\GeneratedReports\ReportGenerator Output\index.htm"
    exit /b %errorlevel%

This is what the complete script could look like. It adds the following pieces:

a. Create an output directory for the report if it doesn't already exist

b. Remove previous test execution files to prevent overwrite issues

c. Remove previously created test output directories

d. Run all sections together synchronously, ensuring each step finishes successfully before proceeding
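Since the package versions are embedded in several paths, one optional refinement is to hoist them into variables at the top of the script so there is a single place to update after a NuGet upgrade. The following is a minimal sketch; the variable names (OPENCOVER_VERSION, REPORTGENERATOR_VERSION, OPENCOVER_EXE, REPORTGENERATOR_EXE) are my own choices for illustration and are not part of either tool.

```bat
@echo off
REM Hypothetical variable names; set the values to match your 'packages' folder.
set "OPENCOVER_VERSION=4.5.3723"
set "REPORTGENERATOR_VERSION=2.1.5.0"
set "OPENCOVER_EXE=%~dp0..\packages\OpenCover.%OPENCOVER_VERSION%\OpenCover.Console.exe"
set "REPORTGENERATOR_EXE=%~dp0..\packages\ReportGenerator.%REPORTGENERATOR_VERSION%\ReportGenerator.exe"

REM Fail early with a clear message if a package folder does not match.
if not exist "%OPENCOVER_EXE%" (
  echo OpenCover not found at "%OPENCOVER_EXE%"
  exit /b 1
)
if not exist "%REPORTGENERATOR_EXE%" (
  echo ReportGenerator not found at "%REPORTGENERATOR_EXE%"
  exit /b 1
)
```

The rest of the script could then call "%OPENCOVER_EXE%" and "%REPORTGENERATOR_EXE%" in place of the hard-coded paths.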

5. Analyze the output

Upon loading the report you can see immediately in percentages how well the projects are covered. As one can see below I have a decent start to the coverage in the pertinent areas of BowlingSPAService, but the report shows I need some additional testing. However, this is exactly the tool that makes me aware of this void. I need to write some more unit tests! (I was eager to get this post completed and published before finishing unit testing) :)

By selecting an individual class, you can see line-level coverage visually with red and green highlighting. Red means a line is not covered, and green means it is. This view makes it easy to spot cases where unit tests have probably only been written for 'happy path' scenarios and there are gaps for required negative or branching scenarios. By inspecting the classes that are not at 100% coverage, you can easily identify gaps and write additional unit tests to increase the coverage where needed.

Upon review you might find individual 'template' classes or namespaces that add 'noise' and are not realistically valid targets for the unit testing metrics. Add the following style filters to the filter switch targeting OpenCover to remove a namespace or a single class respectively:

- -[*]BowlingSPAService.WebAPI.Areas.HelpPage.*

- -[*]BowlingSPAService.WebApiConfig
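To show where those two exclusions would go, here is a sketch of the OpenCover call from step 3(a) with both appended to the existing filter. Keeping the filter in a variable is just one way to keep the long line readable; the name COVERAGE_FILTER is hypothetical.

```bat
REM Sketch: the existing filter plus the two exclusions listed above.
REM COVERAGE_FILTER is a hypothetical variable name used for readability.
set "COVERAGE_FILTER=+[BowlingSPAService*]* -[BowlingSPAService.Tests]* -[*]BowlingSPAService.RouteConfig -[*]BowlingSPAService.WebAPI.Areas.HelpPage.* -[*]BowlingSPAService.WebApiConfig"

"%~dp0..\packages\OpenCover.4.5.3723\OpenCover.Console.exe" ^
 -register:user ^
 -target:"%VS120COMNTOOLS%\..\IDE\mstest.exe" ^
 -targetargs:"/testcontainer:\"%~dp0..\BowlingSPAService.Tests\bin\Debug\BowlingSPAService.Tests.dll\" /resultsfile:\"%~dp0BowlingSPAService.trx\"" ^
 -filter:"%COVERAGE_FILTER%" ^
 -mergebyhash ^
 -skipautoprops ^
 -output:"%~dp0\GeneratedReports\BowlingSPAServiceReport.xml"
```

Each entry is separated by a space, with '+' marking an inclusion and '-' an exclusion.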

That's all you should need to get up and running to generate unit test metrics for your .NET solutions! As mentioned previously, you might want to add this as an output artifact on your CI build server and provide the report link to a wider audience for general viewing. There are also additional fine-tuning options and customizations you can make, so be sure to check out the OpenCover Wiki I linked previously.