So you are all excited and just got into this jQuery thing! You build your code, run the ASP.NET site, and IT IS SO Cool...wait. You look down and see a JavaScript error in the status bar of the browser indicating the following:

"Object Expected"

Let's begin by stating that this is about as generic an error message as they come, and there could be a million different reasons for it. However, if you just got into writing jQuery and are running into this error, then odds are you have not properly referenced the jQuery scripts for your application to use. I hope you at least know there are scripts that must be included. If you are thinking "No, I didn't know", then you need to go back to the jQuery 101 videos on setup.
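If you want to confirm that a missing script reference is the culprit before digging further, a quick check along these lines can help. This is only a minimal sketch: `isJQueryLoaded` is a hypothetical helper name (not part of jQuery), and it takes the global object as a parameter simply so it can be tried outside a browser.

```javascript
// Hypothetical helper: returns true when jQuery has been loaded onto the
// given global object. jQuery exposes itself as a function named `jQuery`.
function isJQueryLoaded(globalObject) {
  return typeof globalObject.jQuery === "function";
}

// In a browser, pass `window`. The guard keeps this snippet harmless
// when it is run somewhere that has no `window` object.
if (typeof window !== "undefined" && !isJQueryLoaded(window)) {
  console.error("jQuery is not loaded; check the path in your <script> tag.");
}
```

If the message shows up in the browser console, the script reference is the first thing to fix.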

However, getting the scripts properly added to your ASP.NET application does have some nuances that make it easy to get wrong. The most common pitfall arises when your ASP.NET site uses a MasterPage. In that case, your content pages are probably not adding the scripts individually, and the most appropriate place to register them is in the MasterPage's <head> block.

You have two main options for referencing the jQuery scripts: download the scripts and include them in your project, or reference them from a CDN (Content Delivery Network) such as Google or Microsoft. The upside to using a CDN is that you do not have to worry about downloading the scripts into a folder and then finding that perfect path in the MasterPage to reference them. The downside is that if you are working in an intranet environment where outside access is limited or denied, the browser will not be able to reach those scripts.

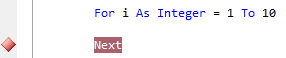

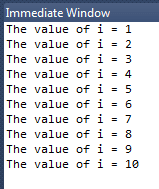

Regardless, the first step in checking whether the original error described above is caused by improperly added scripts is to reference the jQuery scripts from Microsoft's CDN, as shown below:

<script src="http://ajax.aspnetcdn.com/ajax/jQuery/jquery-1.5.1.js" type="text/javascript"></script>

If using the script reference from the CDN above fixed the issue, then great! If you want, you can leave it like that and be done. If you still want to download the scripts and reference them from within your project, then we have to dig a bit deeper into the path you use to reference the scripts.

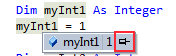

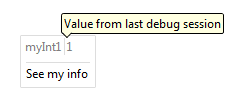

Odds are your jQuery scripts are in a folder named 'Scripts' and your pages are in another folder named 'Pages', and maybe you have multiple levels of folders organizing your code files, which sometimes makes it difficult to get the proper path resolved. One of the BIGGEST pitfalls I see is using the Intellisense dialog when pressing the "=" sign after 'src' in a tag and navigating to the files/scripts manually. Well, you would think VS.NET would give you the proper path, correct? Not always. In fact, it will use a dot-dot notation (../Path/File) which seems proper, but at runtime does not always resolve correctly. The browser resolves that path relative to the URL of the page being requested, not relative to where the MasterPage file lives, so content pages at different folder depths end up pointing at different locations.
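To see how the same dot-dot path can end up pointing at different places, here is a small sketch that uses the standard `URL` constructor to mimic how a browser resolves a relative `src`. The page URLs (`http://localhost/Pages/...`) and the helper name `resolveFromPage` are made up for illustration.

```javascript
// Hypothetical helper: resolve a script path the way a browser would,
// relative to the URL of the page that was requested.
function resolveFromPage(src, pageUrl) {
  return new URL(src, pageUrl).href;
}

// The path as it might be written once in the MasterPage.
const relativeSrc = "../Scripts/jquery-1.4.4.js";

// A content page one folder deep: the path lands on the real Scripts folder.
const shallow = resolveFromPage(relativeSrc, "http://localhost/Pages/Default.aspx");
console.log(shallow); // http://localhost/Scripts/jquery-1.4.4.js

// A content page two folders deep: the same path now points somewhere else.
const deep = resolveFromPage(relativeSrc, "http://localhost/Pages/Admin/Users.aspx");
console.log(deep); // http://localhost/Pages/Scripts/jquery-1.4.4.js
```

The second result refers to a folder that most likely does not exist, so the script never loads and any jQuery call on that page fails.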

In VS.NET there are a few ways you can reference a path to a file, and this is where things sometimes get confusing. Let's look at the four main ways to reference a path from the page's source:

(1.) use a root-relative path: paths are written with forward slashes "/" (not backslashes), and a path that begins with a slash is resolved from the root of the web site rather than from your current location.

<script src="/Scripts/jquery-1.4.4.js" type="text/javascript"></script>

(2.) 'dot-dot' notation: this indicates, "Navigate up to the parent directory" from the path provided.

<script src="../Scripts/jquery-1.4.4.js" type="text/javascript"></script>

(3.) tilde (~) character: this represents the root directory of the application in ASP.NET. The caveat is it can only be used in conjunction with server controls *not* script tags (without some server-side code help shown below). This method is not going to work well by default.

<script src="~/Scripts/jquery-1.4.4.js" type="text/javascript"></script>

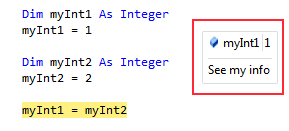

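(4.) ResolveUrl (System.Web.UI) method: This is a server-side method that converts a URL into one that is usable on the requesting client. Notice the use of the server-side code escape tags so this method can be called. Because the example uses a data-binding expression (<%# %>), it is only evaluated when DataBind() is called on the containing control, for example Page.Header.DataBind() in the code-behind. This is the method I prefer, since it dynamically resolves the URL to the proper path. I recommend using it if you are going to reference local project script files.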

<script src='<%# ResolveUrl("~/Scripts/jquery-1.4.4.min.js") %>' type="text/javascript"></script>

My recommendation is to either reference the scripts from a reputable CDN like Google or Microsoft, or use Option #4 above with the 'ResolveUrl' method. This will ensure your custom JavaScript and jQuery files are properly registered with your ASP.NET application.
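To illustrate why an application-root path is safer than a path that simply starts with a slash, here is a rough sketch. It assumes the site is deployed under a virtual directory named `/MySite` (a made-up name), and `expandTilde` is a hypothetical JavaScript stand-in for the idea behind the tilde, not the actual ASP.NET implementation, which runs on the server.

```javascript
// Hypothetical stand-in for the idea behind "~/": replace the tilde with
// the application's root path. appPath is an assumption, e.g. "/MySite".
function expandTilde(appPath, url) {
  if (!url.startsWith("~/")) {
    return url; // not an application-relative path, leave it alone
  }
  const root = appPath.endsWith("/") ? appPath : appPath + "/";
  return root + url.slice(2);
}

// Assumed page URL for an app hosted under the /MySite virtual directory.
const page = "http://localhost/MySite/Pages/Default.aspx";

// A path with a leading slash resolves from the server root and skips /MySite.
const rootRelative = new URL("/Scripts/jquery-1.4.4.min.js", page).href;
console.log(rootRelative); // http://localhost/Scripts/jquery-1.4.4.min.js

// The tilde-style path is expanded to include the application root first.
const appRelative = new URL(expandTilde("/MySite", "~/Scripts/jquery-1.4.4.min.js"), page).href;
console.log(appRelative); // http://localhost/MySite/Scripts/jquery-1.4.4.min.js
```

When the site runs at the server root the two results are the same, which is why the difference often only shows up after deployment.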

There are some good resources that explain how to resolve paths in MasterPages and in traditional pages. Below I have included some links if you would like to investigate further or bookmark them for reference.

Avoiding problems with relative and absolute URLs in ASP.NET

URLs in Master Pages

Directory path names

Microsoft Ajax Content Delivery Network