.csproj file updates

"Unable to find 'EngineAnalyticsWebApp.TestLazy.dll' to be lazy loaded later. Confirm that project or package references are included and the reference is used in the project" error on build

Status 403: The request is not authorized to perform this operation using this permission

Here is a sample of code that might throw this error in which the startsOn value is set to the current time:
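A minimal sketch of such a call, assuming the Azure.Storage.Blobs and Azure.Identity packages. The account URL is a hypothetical placeholder and the one-hour lifetime is an arbitrary choice for illustration; neither comes from the original post.

```csharp
using System;
using Azure.Identity;
using Azure.Storage.Blobs;
using Azure.Storage.Blobs.Models;

// Hypothetical storage account endpoint; replace with a real one.
var serviceUri = new Uri("https://examplestorageaccount.blob.core.windows.net");
var blobServiceClient = new BlobServiceClient(serviceUri, new DefaultAzureCredential());

// startsOn is "now" according to the client's clock. If that clock differs
// from the service's clock, this is the value that may lead to the 403.
DateTimeOffset startsOn = DateTimeOffset.UtcNow;
DateTimeOffset expiresOn = startsOn.AddHours(1); // arbitrary lifetime for illustration

UserDelegationKey userDelegationKey =
    await blobServiceClient.GetUserDelegationKeyAsync(startsOn, expiresOn);
```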

Before you start double-checking user permissions in Azure and going through the Access Control and Role Assignments in Blob Storage, consider that (assuming those are configured correctly) the authorization error may be a red herring for a different issue: the startsOn value passed to GetUserDelegationKeyAsync(). The likely cause is clock skew, that is, a difference between the current time on the client and on the server.

The SAS best practices in the Azure docs (found here) describe the clock skew problem and how to remedy it:

Be careful with SAS start time. If you set the start time for a SAS to the current time, failures might occur intermittently for the first few minutes. This is due to different machines having slightly different current times (known as clock skew). In general, set the start time to be at least 15 minutes in the past. Or, don't set it at all, which will make it valid immediately in all cases. The same generally applies to expiry time as well--remember that you may observe up to 15 minutes of clock skew in either direction on any request. For clients using a REST version prior to 2012-02-12, the maximum duration for a SAS that does not reference a stored access policy is 1 hour. Any policies that specify a longer term than 1 hour will fail.

The fix is simple: as the guidance instructs, either leave the startsOn value unset or set it at least 15 minutes in the past. With this change, the 403 error goes away and the call proceeds as expected.
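The adjustment could look roughly like the sketch below, assuming the Azure.Storage.Blobs package. The class name, method name, and the lifetime parameter are hypothetical and exist only for illustration; the 15-minute allowance comes from the guidance quoted above.

```csharp
using System;
using System.Threading.Tasks;
using Azure.Storage.Blobs;
using Azure.Storage.Blobs.Models;

// Hypothetical helper; not part of the Azure SDK.
public static class UserDelegationKeyHelper
{
    // Allowance for clock skew between client and service, per the SAS guidance.
    private static readonly TimeSpan ClockSkewAllowance = TimeSpan.FromMinutes(15);

    public static async Task<UserDelegationKey> GetKeyAsync(
        BlobServiceClient client, TimeSpan lifetime)
    {
        DateTimeOffset now = DateTimeOffset.UtcNow;

        // Back-date the start so the key is already valid even if the
        // client clock runs ahead of the service clock.
        DateTimeOffset startsOn = now - ClockSkewAllowance;
        DateTimeOffset expiresOn = now + lifetime;

        var response = await client.GetUserDelegationKeyAsync(startsOn, expiresOn);
        return response.Value;
    }
}
```

The other option from the guidance is to pass null for startsOn, which leaves the start time unset so the key is valid immediately.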

New-Service -Name "MyWindowsService" -BinaryPathName "C:\SomePath\1.0.1\MyWindowsService.exe"

Status   Name               DisplayName
------   ----               -----------
Stopped  MyWindowsService   MyWindowsService

"The following exception occurred while retrieving the string: ""Exception calling ""ToString"" with ""0"" argument(s): ""There is no Runspace available to run scripts in this thread. You can provide one in the DefaultRunspace property of the System.Management.Automation.Runspaces.Runspace type. The script block you attempted to invoke was: $this.ServiceName""""", System.Object,System.ServiceProcess.ServiceController,System.WeakReference`1[System.Management.Automation.Runspaces.TypeTable], System.Management.Automation.PSObject+AdapterSet,None,System.Management.Automation.DotNetAdapter,"",System.Management.Automation.PSObject+AdapterSet, System.ServiceProcess.ServiceController,System.ServiceProcess.ServiceController,"PSStandardMembers {DefaultDisplayPropertySet}",False,False,False,False,False, "","",NoneOK not very helpful. It took a while but I deduced the output from the New-Service cmdlet was causing this issue. There are 2 ways I found to handle this.

New-Service -Name "MyWindowsService" -BinaryPathName "C:\SomePath\1.0.1\MyWindowsService.exe" | Out-Null

$newServiceResult = New-Service -Name "MyWindowsService" -BinaryPathName "C:\SomePath\1.0.1\MyWindowsService.exe"

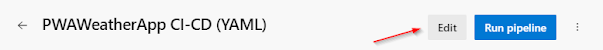

If you have pipelines in Azure DevOps that you don't want to trigger automatically, whether because they are legacy and kept for reference, are not currently in use, or just need to be temporarily disabled, there are different ways to accomplish this depending on the type of pipeline.

CosmosDbConnectionString | AccountEndpoint=https://cosmos-acct-{env}-eus-001.documents.azure.com:443/;AccountKey=abc123...

Using the default configuration, the EnvironmentVariablesConfigurationProvider loads configuration from environment variable key-value pairs after reading appsettings.json, appsettings.Environment.json, and Secret manager. Therefore, key values read from the environment override values read from appsettings.json, appsettings.Environment.json, and Secret manager.

The : delimiter doesn't work with environment variable hierarchical keys on all platforms. For example, the : delimiter is not supported by Bash. The double underscore (__), which is supported on all platforms, automatically replaces any : delimiters in environment variables.

CosmosDb__ConnectionString
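As a rough illustration of the double-underscore mapping, the sketch below sets the variable in-process and reads it back through the hierarchical key. It assumes the Microsoft.Extensions.Configuration.EnvironmentVariables package; the connection string value is a made-up placeholder, and setting the variable from code is only for demonstration (in a deployment it would come from the host environment).

```csharp
using System;
using Microsoft.Extensions.Configuration;

// Placeholder value for demonstration only; not a real endpoint or key.
Environment.SetEnvironmentVariable(
    "CosmosDb__ConnectionString",
    "AccountEndpoint=https://example.invalid:443/;AccountKey=placeholder");

// Build configuration from environment variables only, to keep the sketch small.
IConfiguration configuration = new ConfigurationBuilder()
    .AddEnvironmentVariables()
    .Build();

// The provider exposes CosmosDb__ConnectionString under the ':'-delimited key.
string? connectionString = configuration["CosmosDb:ConnectionString"];
Console.WriteLine(connectionString);
```

In an app that uses the default host builder, the environment variables provider is already registered, so only the lookup by "CosmosDb:ConnectionString" would be needed.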